Ecoacoustics of London

We have created tools to make it easier to monitor the environment in London.

CityNet is a machine-learned system that measures how much audible sound is made by wildlife and by humans in audio captured by sound recorders. These measurements can give a very detailed picture of how biodiversity and human activity change over time.

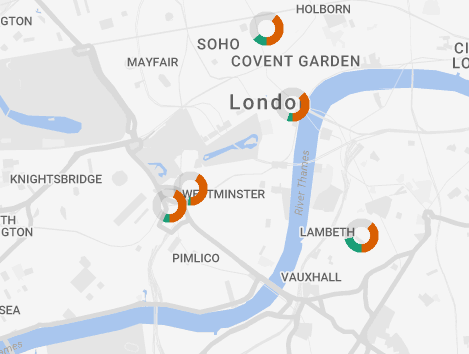

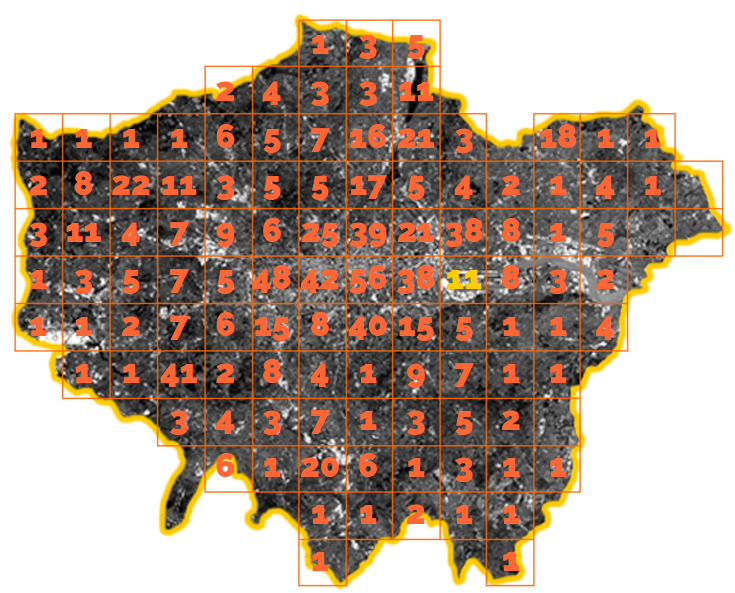

We use an interactive map to display the automatically inferred trends in biotic and human sounds at 63 green infrastructure sites in and around Greater London, UK.

Our dataset was created by leaving a sound recorder at each test site for a period of one week.

For each moment in time in our audio dataset, our system predicts if biotic sound (e.g. birds and insects) can be heard. We also predict if anthropogenic (human) sound can be heard, e.g. cars and voices.

Biotic and anthropogenic sounds can occur at the same time. For example, a bird might be singing while a car drives past. Similarly, sometimes neither biotic nor anthropogenic sound can be heard, for example when the only sound is rain or when there is silence.
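Because the two predictions are independent, every moment falls into one of four cases: biotic only, anthropogenic only, both, or neither. The sketch below illustrates that idea and how such per-moment flags could be summarised over time. It is not the CityNet implementation: the `Moment` structure, the function names `summarise` and `hourly_fractions`, and the example values are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Moment:
    """One short time step of a recording (hypothetical structure)."""
    hour: int            # hour of day, 0-23
    biotic: bool         # True if wildlife sound is audible
    anthropogenic: bool  # True if human-made sound is audible


def summarise(moments):
    """Return the fraction of moments that fall into each of the four cases."""
    counts = {"biotic only": 0, "anthropogenic only": 0, "both": 0, "neither": 0}
    for m in moments:
        if m.biotic and m.anthropogenic:
            counts["both"] += 1
        elif m.biotic:
            counts["biotic only"] += 1
        elif m.anthropogenic:
            counts["anthropogenic only"] += 1
        else:
            counts["neither"] += 1
    total = len(moments)
    if total == 0:
        return {name: 0.0 for name in counts}
    return {name: n / total for name, n in counts.items()}


def hourly_fractions(moments):
    """Map each hour to (fraction with biotic sound, fraction with anthropogenic sound)."""
    per_hour = defaultdict(list)
    for m in moments:
        per_hour[m.hour].append(m)
    return {
        hour: (
            sum(m.biotic for m in group) / len(group),
            sum(m.anthropogenic for m in group) / len(group),
        )
        for hour, group in sorted(per_hour.items())
    }


# Made-up example values, not taken from the real dataset.
example = [
    Moment(hour=5, biotic=True, anthropogenic=False),   # bird singing
    Moment(hour=5, biotic=True, anthropogenic=True),    # bird singing while a car passes
    Moment(hour=8, biotic=False, anthropogenic=True),   # traffic only
    Moment(hour=8, biotic=False, anthropogenic=False),  # rain or silence
]

print(summarise(example))
print(hourly_fractions(example))
```

Keeping the two flags separate, rather than assigning a single label per moment, is what allows the "both" and "neither" cases to be represented.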

Here, we give four example audio clips demonstrating some of the sounds that can be heard in our data.

| Biotic sounds | Anthropogenic sounds |
|---|---|
| Birdsong | |
| | Siren, road traffic |
| Birdsong | Air traffic, siren, road traffic |

Related projects and links

London Sound Survey

A collection of Creative Commons-licensed sound recordings of people, places and events in London.

Nature Smart Cities

A project bringing together environmental researchers and technologists to develop the world's first end-to-end open source system for monitoring bats, which has been deployed in the Queen Elizabeth Olympic Park, east London.

Technologies for Bioacoustic Sensing

A NERC-funded project to provide an end-to-end open-source system for the acoustic monitoring of bat populations at national and international scales.

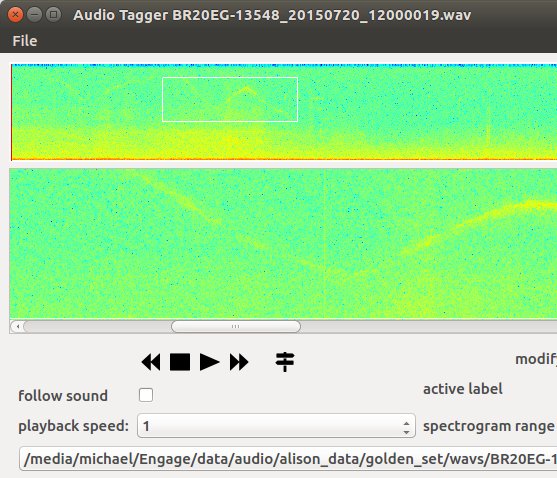

AudioTagger

Computer software we used to label regions of sound files.

Meet the team

Our system was created as part of ENGAGE, an EPSRC grant that has seeded collaborations between computer scientists and data-intensive disciplines that stand to benefit from machine learning.

The people involved in making this particular project happen are:

Alison Fairbrass

Alison is an Engineering Doctoral student at University College London, developing acoustic technology for monitoring biodiversity in the urban environment. She is affiliated with the Centre for Urban Sustainability and Resilience and the Centre for Biodiversity and Environment Research, and is sponsored by the Bat Conservation Trust.

Michael Firman

Michael is a computer science researcher at University College London with a keen interest in finding machine learning solutions for ecological problems.

Kate Jones

Kate is a Professor of Ecology and Biodiversity at the Centre for Biodiversity and Environment Research (CBER) at UCL. Kate looks at the impact of global change on biodiversity and loves bats!

Helena Titheridge

Helena is a Senior Lecturer in the Department of Civil, Environmental and Geomatic Engineering at UCL, Director of the Centre for Urban Sustainability and Resilience and a member of the Centre for Transport Studies.

Gabriel Brostow

Gabriel is an Associate Professor in Computer Vision at UCL, specialising in human-in-the-loop machine learning.